
Quickies: North Carolina–Come to Have Your Drink Legally Drugged; Stay to Be Legally Raped!

Plus knitting gender disparities, securing deep-learning models & paying off Morehouse student debt

- North Carolina: Where ‘No’ Doesn’t Mean ‘No’ When It Comes to Rape and Sexual Assault, by Dara Sharif: “Yes, in the year 2019, in North Carolina, once a person consents to sex, there are no backsies. People can’t change their mind. So, depending on the timing and how a date night might progress, ‘no’ doesn’t mean ‘no.’” Sharif quotes an earlier AP article pointing out, “In North Carolina, it is legal to have nonconsensual sex with an incapacitated person if that person willingly got drunk or high. It is also legal to drug someone’s drink” (my fucking emphasis).

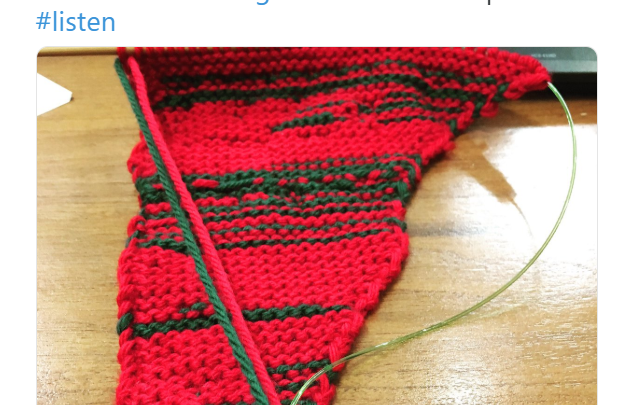

- Mayor’s Knitting Shows Men Still Dominate Debate, by Matthew Robinson: “Sue Montgomery, a city councilor and borough mayor in Montreal, has opted to sit back and knit during monthly executive committee meetings. Concerned about the disparity of female and male voices during the meetings, her latest creation details how often men speak in red and women in green. And the results reveal a worrying finding: that men still dominate public discourse.”

- Congrats, Grad!: Morehouse Keynote Speaker Pays Off Debt of Graduating Class, by Ibn Safir: “Robert F. Smith, the billionaire tech investor and philanthropist . . . announced that his family would provide a grant to pay off the student debt of the entire graduating class of 2019.”

- How We Might Protect Ourselves from Malicious AI, by Karen Hao: “Currently, we let the neural network pick whichever correlations it wants to use to identify objects in an image. But as a result, we have no control over the correlations it finds and whether they’re real or misleading. If, instead, we trained our models to remember only the real patterns—those actually tied to the pixels’ meaning—it would theoretically be possible to produce deep-learning systems that can’t be perverted in this way for harm.”

The article about misleading (not actually malicious, thank you headline writer) AI is a perfect example of “human” dysfunctional thinking. The AI systems have discovered p-hacking!

The author says that if AI systems (actually, AI vision systems, though I think the same effect could happen with any sort of pattern-recognition system) are trained by exposing them only to “real” correlations, they are much less vulnerable to generating false conclusions. But how do you recognize “real” correlations, and how do you ensure that the AI is picking out real versus misleading or imaginary correlations? The answer, which took humans (and baby dinosaurs, thank you Mary!) millions of years to develop and is still far from perfect, is “science”. Don’t just find statistical correlations; use them to develop hypotheses and then test the hypotheses. Repeat.
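To make the p-hacking point concrete, here is a minimal Python sketch. It is only an illustration and has nothing to do with how the systems in the article are actually trained. Every name in it (`pearson`, `search_then_retest`, `run_trials`) and every number (200 noise features, 30 samples, 20 trials) is made up for the example. It searches pure noise for the feature that correlates best with a noise target, then retests that same feature on data it was not picked on.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def search_then_retest(rng, n_features=200, n_samples=30):
    """Pick the noise feature that best 'predicts' a noise target,
    then measure the same feature on held-out samples."""
    total = 2 * n_samples
    target = [rng.gauss(0, 1) for _ in range(total)]
    features = [[rng.gauss(0, 1) for _ in range(total)]
                for _ in range(n_features)]

    # "Discovery": search only the first half of the data.
    first_half = slice(0, n_samples)
    winner = max(features,
                 key=lambda f: abs(pearson(f[first_half], target[first_half])))
    found = abs(pearson(winner[first_half], target[first_half]))

    # "Test the hypothesis": same feature, second half of the data.
    second_half = slice(n_samples, total)
    retest = abs(pearson(winner[second_half], target[second_half]))
    return found, retest


def run_trials(trials=20, seed=0):
    """Average the discovered and retested correlation strength over several runs."""
    rng = random.Random(seed)
    results = [search_then_retest(rng) for _ in range(trials)]
    mean_found = sum(r[0] for r in results) / trials
    mean_retest = sum(r[1] for r in results) / trials
    return mean_found, mean_retest


if __name__ == "__main__":
    mean_found, mean_retest = run_trials()
    print(f"mean |r| when searching: {mean_found:.2f}")
    print(f"mean |r| when retesting: {mean_retest:.2f}")
```

Nothing here is related to anything else, so the strong correlation found by searching is purely a product of looking at enough candidates. On average it shrinks when the same feature is checked against fresh data, which is the "test the hypotheses" step.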

This reminds me of the distinction the SBM people draw between science-based medicine and evidence-based medicine. You need a comprehensive theoretical basis to ensure your statistical models aren’t just based on chance. The self-driving cars need to talk to each other so that if three of them think it’s a stop sign and four of them think it’s a 45 MPH sign, they at least know the matter is in dispute and to proceed with caution. And hopefully not with scattering experiments.
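The three-versus-four case can be sketched in a few lines of Python. This is a toy under stated assumptions, not how any real vehicle system works. The function name `pool_readings`, the label strings, and the 75% agreement threshold are all invented for illustration.

```python
from collections import Counter


def pool_readings(readings, min_agreement=0.75):
    """Combine sign classifications reported by several cars.

    Returns (most_common_label, status), where status is "agreed" if the
    leading label reaches the (hypothetical) agreement threshold and
    "disputed" otherwise. A disputed result means: proceed with caution.
    """
    if not readings:
        raise ValueError("need at least one reading")
    counts = Counter(readings)
    label, votes = counts.most_common(1)[0]
    share = votes / len(readings)
    status = "agreed" if share >= min_agreement else "disputed"
    return label, status


if __name__ == "__main__":
    # Three cars see a stop sign, four see a 45 MPH sign.
    readings = ["stop"] * 3 + ["speed_limit_45"] * 4
    print(pool_readings(readings))  # ('speed_limit_45', 'disputed')
```

The point of the threshold is that a bare majority is not treated as settled. Four out of seven is about 57%, which falls short of the assumed 75%, so the cars know the reading is in dispute.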

Thank you for clarifying that article! I was confused by the headline, but I thought the article was interesting to ponder. Eliminating human bias in creating models (or, more realistically, minimizing it) also seems like one of the biggest problems with deep-learning models (as with surveys, study design, etc.).

I think the headline was going for the “ROBOT UPRISING” trope.

Hi again Buzz, long time no see! Your points are well made.

I reckon the biggest piece of human bias that needs eliminating is the blind faith of developers in their new gee-whiz software, which turns out to be poorly designed and implemented, rushed into production well before it is ready, and released before seeking input from actual local experts in the field to which it is to be applied.

Example: practically every piece of government software ever. They always go for the cheapest quote and fail to buy the modules that actually, you know, make the system work. Systems are then retrofitted on the fly, with the result that the system always remains a badly cobbled-together and buggy nightmare. Yes, I am a cynic on this.