PayScale Responds

Last month I wrote a take-down of the PayScale study on the gender wage gap. Today PayScale tweeted to me to let me know they posted a response.

I don’t want to spend too much time on this because I don’t really think the response adds much I didn’t already write in my last post, but I’ll address a couple points and do encourage you to go read it yourself.

I think PayScale fairly summarized my critiques, but their responses either rehashed the results of their study or offered additional but baffling evidence to show where I was wrong. They also seem unhappy that I originally contacted them for more information about the study methodology via Twitter, where they were restricted to 140-character responses. My only response to that is that if they had actually put basic information about their study methodology in the section they labeled “methodology,” I would have had no need to contact them at all.

One of the big things PayScale says I got wrong was the sample size. I wrote in my post that they had a sample size of 13,500. I was getting this from their methodology where they write “The findings, which focus on gender ratios, job level, industry and job category breakdowns for the above questions, are based on a sample 13,500 respondents who are working in the United States.”

In their response, they claim that the data used in the study actually came from “several million users over the last year” and that the 13,500 were just a smaller sample group that was asked a couple of extra questions. They also provide a link that I thought would take me to more information about the survey they conducted on several million users, but when I clicked it, it merely brought me to the front page of PayScale.com. Who these several million users are, how and when they were surveyed, whether they are all PayScale clients or a random group of individuals, and why none of this is mentioned in their study methodology is unknown. I can make the assumption that their data is probably coming from the “free personal salary report” they advertise on their website, but they got annoyed last time I made assumptions so I’ll refrain.

Most of the rest of PayScale’s response seems to be a thinly veiled sales pitch; however, they end it with the following statement: “We know the conclusion of the Gender Wage Gap study is controversial. It goes against what all of us have heard about the difference in wages between men and women for a long time.”

Remember, PayScale’s conclusion was that the wage gap between men and women in the US is smaller when you control for occupation than when you don’t. If they think this conclusion is controversial at all, or that they are the first ever to think of controlling for occupation when comparing salaries, they are delusional.

PayScale basically did a study on whatever data they had lying around, found some results that are similarish to the real research on that topic, then marketed the shit out of it while providing no actual background or methodology about how they got their results, resulting in free advertising for them on terrible news websites and providing fodder for MRAs who want to claim incorrectly that the gender wage gap is a myth. The only thing “controversial” about this study is the intellectual dishonesty of the company that funded it.

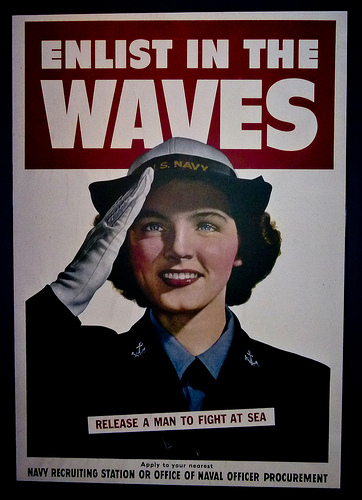

Featured photo by Jamie Bernstein

“Who these million users are, how and when they were surveyed, whether they are all PayScale clients or a random group of individuals, and why none of this is mentioned in their study methodology is unknown. I can make the assumption that their data is probably coming from the “free personal salary report” they advertise on their website, but they got annoyed last time I made assumptions so I’ll refrain.”

Actually, that would be a good assumption. Their link directs to the on-file report that they have for your IP address, as it did for me after I had filled one out (I wasn’t redirected to the homepage). They’re also very strongly hinting at that being the source of their information.

The first page in their salary report creator contains a “People Like You” counter that shows you how many others are classified under the job title that you type in, and we can maybe get a good idea of how large their sample sizes are for those particular jobs:

Software Architect: 4297

Pharmacist: 13149

Civil Engineer: 48895

Secondary School Teacher: 19513

Executive Chef: 16904

Social Worker: 11595

Chief Executive: 30830

Registered Nurse: 23589

Physician: 26917

HR Administrator: 17260

Certified Public Accountant: 29823

Secretary: 6233

However, this search feature is extremely rudimentary. It searches only for very particular words in whatever you type. So, for instance, “Civil Engineer” actually returns the result for “Civil” alone; “Engineer” gives a result of 69889. There are also 19513 “Secondarys” and 12605 “Teachers.” There are also almost 30,000 race car drivers (but the same number of drivers).

And so what can we conclude from these numbers? Maybe that the listings for pharmacist, secretary, and physician are generally correct. We can’t tell for the others, and would actually be best off concluding that they’re simply not indicative of their actual database.
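To make the suspected matching behavior concrete, here is a minimal sketch in Python. The counts are the ones quoted above; everything else (the function name `people_like_you`, the lookup table, and the "first recognized word wins" rule) is my guess at what the counter might be doing, not PayScale's actual code.

```python
# Hypothetical sketch only: the counts come from the counter as quoted above,
# but the matching rule is an assumption, not PayScale's implementation.
KEYWORD_COUNTS = {
    "civil": 48895,
    "engineer": 69889,
    "secondary": 19513,
    "teacher": 12605,
}


def people_like_you(job_title):
    """Return the count for the first recognized word in the title, or None."""
    for word in job_title.lower().split():
        if word in KEYWORD_COUNTS:
            return KEYWORD_COUNTS[word]
    return None


print(people_like_you("Civil Engineer"))            # 48895, the "Civil" count
print(people_like_you("Secondary School Teacher"))  # 19513, the "Secondary" count
print(people_like_you("Engineer"))                  # 69889
```

If the counter works anything like this, a multi-word title tells you about one keyword rather than about the job itself, which is why most of the numbers in the list can't be taken as per-job sample sizes.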

So we still have this unresolved problem in their methodology. That they won’t release even basic numbers like the sample sizes of the 12 careers they chose to highlight is pretty disappointing. And at this point I don’t really see a point in trying to pursue this further; they’re adamant about keeping their “competitive advantage” and won’t give up any of their methodology or regression algorithms, they’re not going to specify their sample sizes, and we cannot in good faith draw any conclusions about their study’s validity other than that it’s at best questionable. But ’tis the issue with for-profit science.

Another question is whether it’s reasonable to assume there isn’t some bias in who chooses to use this “service” over a true random sample.

Amusingly, to me, Glassdoor published an article back in 2009 confirming the wage gap which PayScale is denying.

http://www.glassdoor.com/blog/engineering-pay-gap-glassdoor-reveals-many-women-engineers-earn-less-than-men/

There is almost always such a bias, and it’s what makes people really annoyed with, for example, “human psychology, as applied to evolutionary development”, and similar subjects, where the “entire” sample set tends to be 18-25, college students, at 5-6 universities, instead of, like, any of the other 2.999 billion people on the planet, including the ones living in places where you won’t find “farms”, or “money”, or even a coherent definition of words like “marriage”. People who, in other words, may, in some respects, be just as much “outliers” in some studies, on some subjects, as the bloody college students are. lol

>> “However, men and women are >inherently different< and thus…"

Instant faulty assumption fail. (The bias is strong in this one.)

>> “our team of data scientists”

Can anyone clarify if this is actually a thing? I mean I know analysts and statisticians do data mining (and I’ve worked with large databases in aggregate myself as a programmer) and all scientists use data in some form or another, but isn’t this phrase a bit like “soil gardener” or “equation mathematician”? (space astronaut!?!)

Haha!

Data science is a pretty silly term, but it is actually a thing: https://en.wikipedia.org/wiki/Data_science (though maybe more of a thing in industry than in the academy).

Basically it’s an interdisciplinary approach to looking for patterns in large datasets, as opposed to, e.g., doing experiments to produce more targeted datasets intended to reveal deeper patterns in nature.

Thanks biogeo, yeah, I’ve heard of “data science” during a business intelligence seminar, I just didn’t think people would take that title.. also headdesk for not using google/wiki.

I really enjoyed your take-down article. I thought your points about the lack of transparency concerning their methodology were spot-on. There are several other companies that essentially do the same: provide “unconventional” answers from dubious studies that encourage users to buy their product (and hopefully repost their innovative new findings on Twitter/Facebook…maybe even get a TED talk for making the world smarter by challenging conventional wisdom!). But as we both know, what PayScale has provided cannot be considered a study in the scientific sense, even if they do operate some form of quid-pro-quo transparency.

With that said, I thought your critique of their sample size was well reasoned, but somewhat off base. For one, the idea that an ever increasing sample will concurrently bring us closer to the truth is a fallacy of big data. In truth, being able to do a pairwise comparison of jobs even on a smallish sample should be enough, so long as the methodology is sound. Using a larger random sample won’t solve the problem if the methodology is garbage. Going back to your points about transparency, we have no idea if these comparisons are statistically significant, the size of the confidence interval, etc. Likewise, multiplying their 13,500 participants by 1% (the estimated proportion of the labor force who are secondary school teachers) certainly gives a small number, but as you correctly (and immediately) point out we really can’t be sure what the sample sizes actually are. So a small sample size can’t be the underlying problem with the study because we have no idea what the sample sizes are. Again, you do a great job pointing out that there’s a lot to be demanded from the study.
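For what it's worth, the back-of-the-envelope arithmetic above looks like this in Python. The 13,500 and the 1% come from the discussion; the `relative_noise` helper is just my illustration of the usual rule of thumb that random sampling error shrinks roughly like 1/sqrt(n), and it says nothing about how PayScale actually sliced its data.

```python
import math

total_respondents = 13_500  # figure quoted from PayScale's methodology
teacher_share = 0.01        # the ~1% labor-force estimate mentioned above

# Expected number of secondary school teachers if the sample mirrored the labor force.
expected_teachers = round(total_respondents * teacher_share)
print(expected_teachers)  # 135


def relative_noise(n):
    """Rule-of-thumb scale of random sampling error for a sample of size n."""
    return 1 / math.sqrt(n)


# The subgroup estimate is roughly ten times noisier than the full-sample one.
print(round(relative_noise(expected_teachers), 3))   # 0.086
print(round(relative_noise(total_respondents), 3))   # 0.009
```

That only covers random noise, though. It assumes the 135 are a fair draw, which is exactly the part nobody outside PayScale can check.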

That said, my problem with PayScale’s study and conclusions is that they aren’t really challenging conventional wisdom concerning the gender wage gap. Even if we give PayScale the benefit of the doubt, their study doesn’t address the issues brought up in the study by the AAUW. Sure, a pairwise comparison of salaries in each job might show some equality, but the apples-to-apples comparison effectively removes the ability to capture any other potential bias in the system. The AAUW study, on the other hand, compares median salaries overall and between different sectors of the economy. This is a far better idea because if there is an inherent, systemic difference not only in how much women are paid, but also in job mobility and availability, we haven’t eliminated those signals from the sample. I hope that makes sense.

+1 for methodology nerdiness. <3!

As I looked at their study again, I see that PayScale uses median salaries after controlling for other factors. However, again, I feel a certain amount of controlling for factors might effectively obfuscate important signals. That said, I went through the 12 industries they chose to highlight and it appears that 9 of the 12 show men making more money than women. PayScale’s contention is that this is proof the gender gap isn’t really all that bad. But as Jamie points out above, we know that within certain industries the gap isn’t as broad – that’s hardly new information. It seems like PayScale hopes you just pay attention to the line charts on the front page and don’t dig deeper.

“That said, I went through the 12 industries they chose to highlight and it appears that 9 of the 12 show men making more money than women.”

All show them making more than women for the “uncontrolled” salaries. All but one show them making more than women when “controlled,” that one job being where they share the same median salary. So, I’m not quite sure where you’re getting that number(?). Even the number of jobs of the 12 where the pay control is negative (women are scaled down) is 4 of the 12 (and ~43% of the total jobs amongst all those categories, if we assume i.i.d.), so I’m confused.

And your point about sample size is correct, but it might be best to distinguish which kinds of error a larger sample size actually reduces. Bad methodology, non-response bias, and Type I/II errors are all different and have to be quantified differently (which is almost impossible for non-response bias, the biggest problem with their study).
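Here is a toy simulation of that distinction, as a sketch under made-up assumptions. The salary distribution and the response model (people earning above 50,000 are three times as likely to respond) are invented purely for illustration and have nothing to do with PayScale's real data.

```python
import random
import statistics

random.seed(0)

# Entirely made-up population of salaries, for illustration only.
population = [random.gauss(50_000, 10_000) for _ in range(200_000)]
true_mean = statistics.mean(population)


def random_sample(n):
    """A fair simple random sample of n salaries."""
    return random.sample(population, n)


def respondents_sample(n):
    """Assumed response model: higher earners respond three times as often."""
    picked = []
    while len(picked) < n:
        salary = random.choice(population)
        respond_prob = 0.75 if salary > 50_000 else 0.25
        if random.random() < respond_prob:
            picked.append(salary)
    return picked


for n in (100, 10_000):
    fair_error = statistics.mean(random_sample(n)) - true_mean
    biased_error = statistics.mean(respondents_sample(n)) - true_mean
    print(f"n={n:>6}: random-sample error {fair_error:+9.0f}, "
          f"respondent-sample error {biased_error:+9.0f}")
```

In this toy setup the random-sample error shrinks toward zero as n grows, while the respondent-sample error settles near a fixed offset of several thousand no matter how large n gets. More respondents buy you less noise, not less bias.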

I got 9 of 12 by going through the job sector placards (for lack of a better term) and counting the total positive differences, which I would think they derived by using the controlled figures. (See: http://www.payscale.com/data-packages/gender-wage-gap/job-distribution-by-gender) But if I’m misunderstanding something it wouldn’t be the first time.