The Dunning-Kruger effect: Misunderstood, misrepresented, overused and … non-existent?

Just stop using it!

A couple of weeks ago a statement popped up in my Facebook feed that surprised me.

New evidence suggests that the Dunning-Kruger effect doesn’t exist – people who don’t know what they’re talking about are aware of that fact.

The post came from the QI Elves, who delight in posting quirky and unexpected scientific trivia but rarely include sources and occasionally get fooled by “press release”-science. They are very popular though, so I thought I should look into the claim, which sent me down a rabbit hole, or rather a whole rabbit warren. After reading more social psychology and education science papers than I ever care to again, I’ve come to a few conclusions that I’d like to share with you all.

1. The Dunning-Kruger effect is a mess

The original paper

If you’ve never been curious about where the term comes from, you have presumably also never read the 1999 paper by social psychologists Justin Kruger and David Dunning that first described the effect. And even if you have read it, you likely need a very quick refresher. Kruger and Dunning did four studies, one on recognizing humor, two on solving logic puzzles and one on knowing grammar, in which they compared the scores volunteer psychology students got on a test in the domain with the same students’ self-assessments of their performance on that test.

Their results showed that on average the students overestimated themselves and that this was chiefly due to the self-assessments of the lowest-scoring quartile, while the highest-scoring quartile slightly underestimated their own performance. Their hypothesized explanation was that in certain domains, the skill required for self-assessment is the same skill required to succeed, leaving those who lack the skill “Unskilled and unaware of it” (the title of their paper).

Just for some domains, mind you, as they state in the paper:

We do not mean to imply that people are always unaware of their incompetence. We doubt whether many of our readers would dare take on Michael Jordan in a game of one-on-one, challenge Eric Clapton with a session of dueling guitars, or enter into a friendly wager on the golf course with Tiger Woods.

We have little doubt that other factors such as motivational biases (Alicke, 1985; Brown, 1986; Taylor & Brown, 1988), self-serving trait definitions (Dunning & Cohen, 1992; Dunning et al., 1989), selective recall of past behavior (Sanitioso, Kunda, & Fong, 1990), and the tendency to ignore the proficiencies of others (Klar, Medding, & Sarel, 1996; Kruger, 1999) also play a role.

Public perception

It just fits so neatly with our own personal observations, doesn’t it? Everyone encounters overconfident people in their life, and it feels good to put them down with a reference to SCIENCE! So the Dunning-Kruger effect (DKE) has become a very popular, and often misused and misunderstood, concept. Here are a few things DKE is not, even if people say it is:

- It is not “unintelligent people think they are smart” – As described, DKE applies to anyone with low skill in a domain, even if they are capable in others, as when physicists turn into armchair epidemiologists.

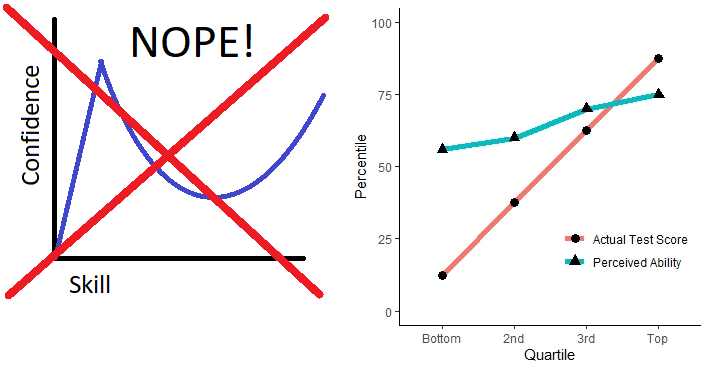

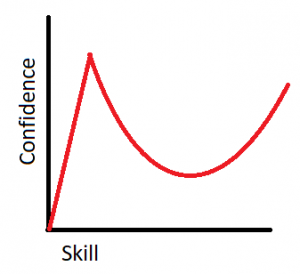

- It is not “amateurs think they are smarter than the experts” – DKE says the unskilled overestimate their skill on average, but only in a small minority of studies does the bottom quartile’s estimate put them in the top quartile. That hasn’t prevented even bloggers at Psychology Today from peddling the oft-seen “alternative” DKE graph, which is always just made up in MS Paint, like mine.

- It is not that thing where you start studying a subject, think you’ve got it all figured out after the first two semesters, and realize just how little you know halfway through the third. This one is sometimes illustrated with the same pulled-from-the-anus graph.

- It is not the similar thing where the relationship between knowledge in a field and willingness to defend strong opinions about the field is weirdly non-linear.

They are close enough though, at least in spirit, to help make DKE as popular as it is, in both real and warped variations. David Dunning mentions some of these, and the problems with them, in this recent article on MSN: What is the Dunning-Kruger effect? And Dunning does have a paper about the overconfidence of beginners, with a graph bearing a very slight resemblance to the “Not a Dunning-Kruger” graph, but since it doesn’t show the Dunning-Kruger effect and that paper doesn’t have Kruger as an author, it should really be called a Dunning-Sanchez graph.

And of course there’s the same problem one encounters with any finding of this kind: it describes patterns in messy data, not rules that apply at the individual level. Self-assessments vary greatly within each group, but the findings rely on the group means and are presented only through those means, so in public perception those means come to represent everyone and get applied to individuals.

Later work

So public perception is a mess. But what about the scientific perception? Well, although the paper has received criticism right from the start, there are also multiple studies replicating the result in other domains. What seems to be lacking in a lot of later papers, though, at least in my cursory review, is any acknowledgement of the caveats in the original paper and of the criticism it has received since. The authors have responded to at least some of the criticism with papers and reanalyses, and I’m not competent to judge their quality, but most replications seem to stick with the original level of analysis.

There also seems to be limited recognition that the effect isn’t universal. Yes, it has shown up in a diverse selection of tasks, but it has also been shown to vary with the difficulty of the task, with the domain, with the type of self-assessment, and possibly with the cultural background of the test subjects, although I haven’t been able to find a source for this last aspect that uses comparable methodology. So what we have scientifically is an effect that seems to appear in a lot of circumstances but is known to have limitations. And these limitations are largely unexplored, and unfortunately ignored by critics and fans alike. In other words, it’s a mess.

2. The Dunning-Kruger effect doesn’t exist, maybe

As I mentioned above, Dunning and Kruger acknowledged that the Better-Than-Average effect and regression towards the mean would have an effect on their studies, but believed the effect they observed was too large, and too clearly influenced by the introduction of training etc., to be due to these alone. Other researchers disagreed, and although Dunning and Kruger have responded to previous criticism with reanalyses and additional studies, new papers critical of their findings keep appearing.

One such paper, written by Gilles E. Gignac and Marcin Zajenkowski, was published early this year in Intelligence and bears the confident title The Dunning-Kruger effect is (mostly) a statistical artefact: Valid approaches to testing the hypothesis with individual differences data. They use simulated data on IQ and self-assessed IQ and supposedly find a Dunning-Kruger effect there that shouldn’t exist, and then they use real data on IQ and self-assessed IQ to do a statistical analysis they believe should reveal any DKE, and find none.

Based on this they state that:

When such valid statistical analyses are applied to individual differences data, we believe that evidence ostensibly supportive of the Dunning-Kruger hypothesis derived from the mean difference approach employed by Kruger and Dunning (1999) will be found to be substantially overestimated.

The statistics in their approach seem to check out, but I think a few points are worth considering:

- Much like previous critics, they go back to that first paper, and seem to ignore the authors’ responding papers.

- They have tested one domain and extrapolate to all others.

- The Dunning-Kruger effect they believe is overestimated is the difference in how much the least skilled and the most skilled misestimate their level. They still show a strong mean overestimation in the least skilled group.

The first two show how this paper adds to the existing mess by ignoring the mess that already exists. And the third shows that even if DKE as a scientific hypothesis rests on uncertain foundations, the popular perception of it would not necessarily be affected. Which brings me to the third conclusion I’d like to share.

3. Everyone should move past Kruger and Dunning (1999)

My favouritest of recent papers critical of the Dunning-Kruger effect is the first one I came across when I started searching for what the QI elves might be referring to: How Random Noise and a Graphical Convention Subverted Behavioral Scientists’ Explanations of Self-Assessment Data: Numeracy Underlies Better Alternatives by Nuhfer et al. This paper also suffers from some of the problems listed above. It focuses on one domain, and it ignores all the elements of DKE research it isn’t directly focused on. But even if that means it fails, at least in my opinion, to refute previous DKE findings, it raises some important points and, again in my opinion, makes a good case that scientists in fields where DKE is relevant should abandon the concept and the approach popularized by that first paper, even with the modifications made since.

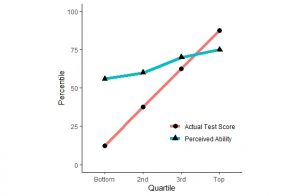

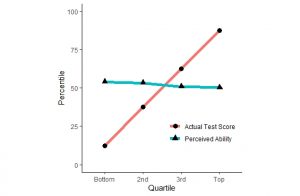

Let me explain by showing you another DKE type graph. At first glance it seems like a plausible outcome for a DKE type experiment, yes? Unless you have a lot of experience with such graphs, it will take you a little while to notice that it’s very flat, has a surprising downwards slope and that all the points are very close to the 50th percentile.

That is because, as you already know if you read the caption, it is random data. You can’t tell that straight from the graph though, because, as in most DKE type graphs, there is no visual representation of variance, although I suspect that for at least some DKE studies you would still find it hard to tell if the data was random or not.

This does not mean all DKE graphs are nonsense, but it does mean that “noise” in a self-assessment looks visually similar to some aspects of the DKE in such a graph. It’s regression to the mean, compounded by the effect of sorting the data into quartiles on one axis and percentiles on the other. Combine that with floor and ceiling effects for the bottom and top quartiles, and the better-than-average effect, and a noisy self-assessment in an underpowered study is bound to produce something similar to the DKE.
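To see how pure noise can produce this kind of graph, here is a minimal Python sketch. It assumes that test scores and self-assessed percentiles are drawn independently at random, so nobody’s self-assessment carries any information at all. It is a simplified illustration, not the code behind the random-data graph above, and the function names are made up for this example.

```python
import random
from statistics import mean


def percentile_ranks(values):
    """Convert raw values to percentile ranks between 0 and 100 within the sample."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    for position, i in enumerate(order):
        ranks[i] = 100.0 * position / (n - 1)
    return ranks


def simulate_random_dke(n=1000, seed=1):
    """Return (mean actual percentile, mean self-assessed percentile) per quartile.

    Both measures are pure noise and independent of each other.
    """
    rng = random.Random(seed)
    actual_scores = [rng.random() for _ in range(n)]
    self_assessed = [rng.uniform(0, 100) for _ in range(n)]
    actual_pct = percentile_ranks(actual_scores)

    # Sort participants into quartiles by their actual percentile, as in a DKE graph
    order = sorted(range(n), key=lambda i: actual_pct[i])
    size = n // 4
    quartiles = []
    for k in range(4):
        members = order[k * size:(k + 1) * size]
        quartiles.append((mean(actual_pct[i] for i in members),
                          mean(self_assessed[i] for i in members)))
    return quartiles


for k, (actual, estimated) in enumerate(simulate_random_dke(), start=1):
    print(f"Quartile {k}: actual {actual:5.1f}, self-assessed {estimated:5.1f}")
```

The self-assessed means all hover around 50, while sorting forces the actual means to roughly 12.5, 37.5, 62.5 and 87.5. The result is a bottom quartile that seems to overestimate itself by something like 35 to 40 percentile points and a top quartile that seems to underestimate itself by a similar amount, without any psychology involved.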

The actual study in this paper is anything but underpowered, though. Nuhfer et al. have had several thousand students take their Science Literacy Concept Inventory (SLCI), and their results from analyzing that data are fascinating (e.g. taking science courses does not appear to correlate with gains in science literacy, but spending more time in university does). This paper, however, is based on a smaller subset of 1154 students and professors who have also done related self-assessments, mainly a Knowledge Survey of the SLCI (KSSLCI).

At the superficial level of visually inspecting a Dunning-Kruger type graph, this data appears to show a weak DKE. (Figure 1 in the paper if you’re very interested.) Nuhfer et al., based on a previous paper and simulations, contend that this is entirely due to floor and ceiling effects, and they focus on an alternative graphical representation and a different way of defining who is an expert.

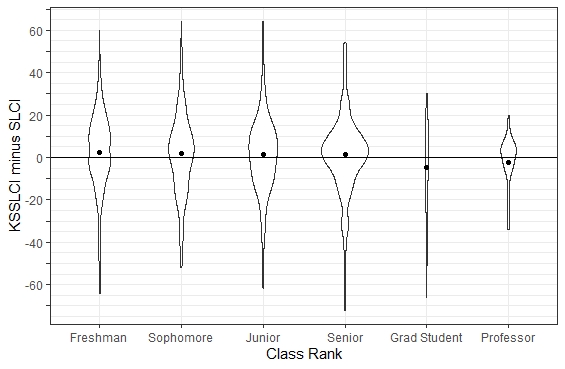

Let me first briefly describe their graphical representation. Since they have data on university students at all levels, as well as professors, they graph the over/underconfidence for each class rank, and they show the spread. They also show the confidence interval for the mean.

Their choice of plotting software, or their use of it, leaves a little to be desired when it comes to showing the spread, so I’m giving you my violin plot of their data instead. The dot shows the mean in each group, and the width of the blob represents the number of individuals at that level of over- or underconfidence. Overconfidence is up, and 0 represents a precise assessment of one’s score.

It’s possible to see a weak trend, with overconfidence in Freshmen becoming underconfidence among Professors, but we also see that the spread in self-assessment is quite wide, and Nuhfer et al. show that the confidence intervals for most of the means include zero. (Their graph does have that advantage over mine.)

For a lot of DKE papers the self-assessment takes the form of guessing where you would rank in your group. Nuhfer et al. have that type of self-assessment for this data as well, and it shows a much more DKE-like result. But their contention is that this is a noisy and not particularly interesting form of self-assessment that will always show some level of DKE. Again, I think they are treating past analyses a bit unfairly by focusing entirely on the graphical representation, but they still have a point. Estimating yourself to be of average skill when you’re not doesn’t necessarily mean you think you’re really good at something or on par with an expert; it means you think most people are as bad at it as you are.

As I mentioned, in addition to alternative graphical representations (they also suggest using histograms of the various subgroups, and show how a similar understanding of the self-assessments can be read from those), they offer a different way to discuss self-assessment accuracy in a study. (If you are interested in the histograms, read the paper; this post is too long as it is.) By looking at the group that are arguably “real experts”, the professors, they establish boundaries for “good”, “adequate” and “inadequate” self-assessment, and show that by those measures 43% of the Freshmen and Sophomores gave “good” self-assessments and 17% gave “adequate” ones. A small number were extremely over- or underconfident, but not significantly more often one than the other, showing that the impression one might get from a DKE graph of the data would be misleading.
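The boundaries Nuhfer et al. actually use are derived in their supplement and are not reproduced here. Purely to illustrate the general idea of using an expert group as a yardstick, here is a Python sketch with hypothetical cutoffs of one and two standard deviations of the experts’ self-assessment errors. Those cutoffs, the function names and the example numbers are all assumptions made for this illustration.

```python
from statistics import stdev


def expert_bands(expert_errors):
    """Hypothetical cutoffs: one and two standard deviations of the experts' errors."""
    sd = stdev(expert_errors)
    return sd, 2 * sd


def classify(error, bands):
    """Label a self-assessment error (self-assessed minus actual score)."""
    good_limit, adequate_limit = bands
    if abs(error) <= good_limit:
        return "good"
    if abs(error) <= adequate_limit:
        return "adequate"
    return "inadequate"


def share_by_category(errors, bands):
    """Fraction of a group falling in each category."""
    counts = {"good": 0, "adequate": 0, "inadequate": 0}
    for error in errors:
        counts[classify(error, bands)] += 1
    return {label: count / len(errors) for label, count in counts.items()}


# Made-up numbers, purely for illustration
professor_errors = [-5, 5, -5, 5]
student_errors = [0, 8, 20, -1]
bands = expert_bands(professor_errors)
print(share_by_category(student_errors, bands))
# prints {'good': 0.5, 'adequate': 0.25, 'inadequate': 0.25}
```

The point of anchoring the bands to experts is that “good” is then defined relative to the best self-assessment anyone realistically achieves, rather than relative to perfect accuracy.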

The paper of course goes into much more detail; the analysis of what should be considered a “good” self-assessment is part of a 17-page supplement, but I hope I’ve managed to summarize it in a not too confusing fashion.

In conclusion

As mentioned at the beginning, my trip through all these papers led me to three conclusions, which can be summed up like this: It’s time to leave the Dunning-Kruger effect behind. If you’re using it as an insult or to explain individual behavior, you are oversimplifying to the extent that you are just wrong. If you are using it as the one explanation of differences between self-assessment and actual performance, you are probably not producing results that are useful. Analyzing differences between self-assessment and actual performance has an important place in improving education, and education researchers are leading the way toward better ways of presenting those differences than the ones DKE papers have been using for two decades.

*looks at title* Oh, thank god someone is saying it. I did some minimal research into DKE years ago and was convinced, ironically enough, that people do not understand it as well as they think they do. I’ll have further commentary when I have time to read this in more detail.

A few thoughts:

1. The “Better than Average” effect is not generalizable to other cultures, and the opposite has been found in studies of East Asians. The obvious experiment, then, is to test East Asian subjects for DKE. If DKE theory is correct, then you’d expect people with low test scores to systematically underestimate their test scores even more than people with high test scores do. This implies a highly nonlinear “perceived ability” curve. This isn’t impossible, but it sounds highly implausible to me.

A fundamental problem I have with the DKE is that there is a philosophical distinction between an accurate self-assessment, and the ability to produce an accurate self-assessment. If I’m a person of above average ability, and I assess my own ability by rolling ten dice and taking the average plus one, I might produce accurate results but it does not show my ability to produce accurate results. If everybody used this method of self-assessment, then you would find that people in the upper range have more accurate results, but it would be wrong to conclude that they have greater ability to produce accurate results. Dunning and Kruger have responded to “regression to the mean” criticisms by performing statistical tests, but I cannot see how any statistical test could possibly bridge the philosophical gap.

We are honored to be noted as “…favouritest of recent papers….” Thank you!

I really like the “Skepchicks” theme site. I would not have found it without your blog popping up under the Dunning-Kruger key-word search.

Our paper you cited was published in Numeracy because our focus was on the mathematical reasoning and the interpretations of mathematical artifact patterns produced in these graphs as the products of human behavior. We did not rest on simply the 1999 seminal paper or just the unique D-K type graph, but went through a series of papers through 2017 that used several types of graphs and looked for artifacts in paper after paper.

Wherever the math underlying the interpretation of paired measures diverges into innumeracy, regardless of the context of what is self-assessed, whether it be science literacy or the ability to climb a rope, the interpretations are built on quicksand and they all sink together. If one is to salvage the D-K Effect, it’s not possible to do that with the numerical reasoning used to establish it. If psychologists want to make an argument to validate the original math, they now need to make their arguments in a peer-reviewed math journal.

That doesn’t mean that the 1999 paper was a bad piece of work. Not at all. Their paper was a pioneering paper, and such papers are never expected to get everything right. These papers introduce a new idea and perhaps a new concept. It’s normal to expect later researchers will further the knowledge and clean misunderstandings up. We have tried to be clear since our first 2016 paper in Numeracy that we were not deprecating the value of their work.

What did we change with our work? We believe that we refuted the claims made in several peer-reviewed papers that most people are unskilled and unaware of it. As a whole, people are generally capable of self-assessment.

We refuted the claim that the least competent people greatly overestimate their ability. Instead, we showed that both experts and novices underestimate and overestimate with the same frequency. It’s just that experts do that over a smaller range.

What does our work seem to show that Kruger and Dunning got right? One of the most important findings of the Kruger-Dunning paper is that self-assessment can be taught and learned. Although people’s self-assessment, on average, isn’t terrible, it can be made much better. Although most people are pretty good at self-assessment, a small number are not. We saw about 5-6% fit that descriptor of “unskilled and unaware of it” in our data. We validated that paired measures, the kind that Kruger and Dunning pioneered, give useful information about self-assessment. One just has to recognize the problems created by noisy data, collect a sufficiently sized database to tease out the self-assessment signal, and take care when choosing graphics to interpret. The seminal paper took an unfortunate turn in the numeracy of data interpretation, but others kept repeating that error for two decades, so it’s not as if the errors were that easy to spot.

After 2017, we moved past thinking of self-assessment measures as documenting people as incompetent and into seeing that self-assessed competence is surprisingly well-tied to demonstrable competence. So we started exploring, “What can we DO with the paired measures.” That is where our work lies now, and with much bigger data sets. We have found some extraordinary applications, one being in social justice and privilege, which seems to be a bit of a theme here at SkepChick. Group-means self-assessment ratings are astoundingly close predictors of mean demonstrated test scores on that same knowledge as shown here: https://www.improvewithmetacognition.com/paired-self-assessment-competence/. That confirmation enabled our seeing how the quality of self-assessment accuracy varied by different demographic groups. Paired Measures of Competence and Confidence Illuminate Impacts of Privilege on College Students https://scholarcommons.usf.edu/numeracy/vol12/iss2/art2/.

You will appreciate Jonathan Jarry’s recent work on Dunning-Kruger. Jarry is one of the most careful science writers out there. The process through which he developed this article is a model that should be taught to students in how to evaluate disruptive research that contradicts consensus: talk to the experts and draw on an independent expert to actually test the data for evidence. https://www.mcgill.ca/oss/article/critical-thinking/dunning-kruger-effect-probably-not-real

PS. Jarry also has an article on jade amulets. Subscribe to his McGill University site on cracked science. It’s free.

I liked it, but for someone supposed to be a careful science writer he seems to draw a strong conclusion with little evidence. I too initially found the “here’s a Dunning-Kruger graph, but it’s random data” somewhat shocking, but in looking at Dunning-Kruger’s paper(s) and other supporting papers they all show a bias in the Dunning-Kruger direction beyond that of random data. Nuhfer’s paper, which I also reference, is intriguing, but I believe it mostly just shows that we intuitively over-interpret this type of graph.

But they could all be correct and the other statistical tests performed in all of these papers could be wrong, but the way to show that isn’t to just look at that one style of graph. I think it’s a pity Dunning-Kruger’s raw data isn’t available (as far as I know) so that the statistical tests can be redone/criticized directly, but that doesn’t excuse just ignoring their existence.

The papers Jarry linked in Numeracy didn’t just test the infamous Dunning-Kruger graph. They also tested equally deceptive (y – x) vs (x) scatter plots, bar charts and histograms that were used to tout the Dunning-Kruger Effect in peer-reviewed psychology papers. The McKnight team enlisted by Jarry to try to debunk the papers by Nuhfer’s team verified the team’s conclusions by testing all of these graphs.

Dunning and Kruger’s original databases wouldn’t do you any good, because the datasets they used in their four case studies are all too small to achieve reliability and thus reproducibility. The 2016 Numeracy paper makes the point that until an instrument produces a database large enough to establish reproducible reliability, meaning it can achieve consistent correlation with itself, it can’t correlate with a second measure any better than it can correlate with itself.

A worst case in such a study is that the data acquired really IS just 100% random noise, so the 2016 paper asked how big a dataset one needs to reproducibly obtain the pattern random noise produces on a Kruger-Dunning graph. They showed that a database of about 400 participants achieves that pretty well, putting 100 participants’ data in each quartile. The Numeracy papers used a database of real measures taken on over 1100 participants together with random-noise simulations of a dataset of the same size. That dataset has grown, as shown in a later 2019 Numeracy paper, and was still producing the same reproducible results. That is what makes these papers so devastating. Further, Nuhfer’s team publishes their raw data with each of their Numeracy papers in a downloadable Excel® file so that statisticians like you and the McKnights, or anyone, can verify the results. Just look for the ancillary appendices in the upper right when you open one of their papers in Numeracy, download their data and test away.
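The idea that a large enough sample makes even the random-noise pattern reproducible can be illustrated with a small simulation. This Python sketch is not the 2016 paper’s analysis. It assumes scores and self-assessments are independent uniform random numbers between 0 and 100, and measures how much the bottom quartile’s average “overestimate” varies from one simulated study to the next for a given number of participants. The function names and the choice of 200 replications are arbitrary.

```python
import random
from statistics import mean, stdev


def bottom_quartile_gap(n, rng):
    """Mean (self-assessed - actual) in the bottom quartile of one random 'study'."""
    actual = [rng.uniform(0, 100) for _ in range(n)]
    self_assessed = [rng.uniform(0, 100) for _ in range(n)]
    order = sorted(range(n), key=lambda i: actual[i])
    bottom = order[: n // 4]
    return mean(self_assessed[i] - actual[i] for i in bottom)


def spread_of_gap(n, replications=200, seed=0):
    """Standard deviation of the bottom-quartile gap across repeated random studies."""
    rng = random.Random(seed)
    gaps = [bottom_quartile_gap(n, rng) for _ in range(replications)]
    return stdev(gaps)


for n in (40, 100, 400, 1100):
    print(f"{n:5d} participants: spread of bottom-quartile gap = {spread_of_gap(n):.2f}")
```

The spread should shrink roughly in proportion to one over the square root of the sample size, so quadrupling the number of participants roughly halves it. Where exactly a pattern becomes “reproducible enough” is a judgment call this sketch does not make.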

I’ve read several of Nuhfer’s group’s papers. I’ve played with their data. They make a very solid case that Dunning-Kruger doesn’t apply to their test for Science Literacy. But they only focus on the graphs. There is nothing in their paper about the tests for significance, the additional statistical analyses done in DK papers after the first or a recognition that DK was never thought to apply to all tests for all fields.

I’ve already described some of the other shortcomings in the blog post, so I won’t repeat them here. Maybe the crew Jarry enlisted went beyond the naive approaches he describes in your link, but as presented it looks to me like they are ignoring all the effort DK and others have put into answering previous criticism. Maybe their additional significance testing and more complex protocol design is insufficient, but ignoring that it exists doesn’t make me inclined to trust the newer critics.

The statement: “They make a very solid case that Dunning-Kruger doesn’t apply to their test for Science Literacy. But they only focus on the graphs” is surprising coming from a statistician.

Their papers are not contextual to whether paired measures of self-assessed competence and demonstrable competence come from self-assessing ability in science literacy, humor, critical thinking, grammar, or climbing a rope. The papers are in a peer-reviewed mathematics journal for a reason: they reveal the mathematical pitfalls inherent in making interpretations of ALL such paired measures data bounded within specific ranges (such as 0 and 100). While quality of the study does matter (participants understand what they are being asked to self-assess, getting a critical mass of data to establish reproducibility, verifying reliability of data represented in both paired measures) context of what is being self-assessed does not. The math for dealing with paired measures poses the same challenges regardless of context.

In addition, your blog is seriously misrepresenting their work by indicating that they never recognized differences between real data and data simulating randomness. What they actually showed is (1) how to tease the self-assessment signal out of such noisy paired data and (2) how to look objectively at the self-assessment signal clarified from suppressing the noise. Their 2016 paper even presents their data in the way that Dunning-Kruger advocates do to show that their science literacy data would indeed support a Dunning-Kruger Effect if simply presented in that traditional way. (And that traditional way is still being employed in 2020 despite the fact that the mathematicians have affirmed that this is untenable.) Afterwards, the Numeracy authors explained why that traditional consensus is unacceptable mathematically and went on to show that the same data processed in ways that minimize generating the artifact patterns misinterpreted as human behavior show that humans are not bad at self-assessment.

One thing seems very clear. If psychologists want to salvage the Dunning-Kruger Effect, they will be unable to do that using the same mathematics that was used to establish it.

Some of what you are asking for appears to be in the Appendices (the PDFs, not the raw data spreadsheets) of the papers by Nuhfer and others in Numeracy in 2016, 2017, and 2019. However, the errors they found that undermined the Dunning-Kruger Effect, at their root, are in numeracy that comes from attending to the understanding of what the numbers actually mean, rather than from graphs that arise during manipulation of numbers via calculations. As indicated in the 2017 Numeracy paper:

“Measuring whether or not people are good judges of their abilities rests largely on numbers that result from simple arithmetic, namely subtraction. To quantify our abilities to self-assess accurately, we select a challenge, provide an estimate of our self-assessed ability to meet that challenge, and complete a direct measure of our competence in engaging the challenge. Obtaining our self-assessment accuracy requires nothing more than computing the difference between the two measures. While the computation could scarcely be simpler, the simplicity belies a surprisingly complex numeracy required to derive meaning from these numbers.”
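The subtraction itself is trivial, but the bounded scale matters. Here is a minimal Python sketch, assuming both measures are expressed as percentages between 0 and 100; the function names are made up for this illustration.

```python
def self_assessment_error(self_assessed, actual):
    """Self-assessed minus actual score, in percentage points.

    Positive values mean overconfidence, negative values underconfidence.
    """
    for value in (self_assessed, actual):
        if not 0 <= value <= 100:
            raise ValueError("both measures must lie between 0 and 100")
    return self_assessed - actual


def possible_error_range(actual):
    """Smallest and largest error a participant with this actual score can produce."""
    return (0 - actual, 100 - actual)


print(self_assessment_error(70, 55))  # 15: overconfident by 15 points
print(possible_error_range(10))       # (-10, 90): mostly room to overestimate
print(possible_error_range(95))       # (-95, 5): mostly room to underestimate
```

This is one reason noisy self-assessments on their own push the lowest scorers toward apparent overconfidence and the highest scorers toward apparent underconfidence: a low scorer has far more room to err upward than downward, and a high scorer the reverse.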

In any event, as evidenced by their 2019 paper, research has moved on from arguing about the validity of proclaiming most people “unskilled and unaware” to exploring the new opportunities that arise from recognizing that such is not the case.

Elsewhere, support for The “Effect” as it has been articulated since 1999 is simply unraveling, even in the discipline of psychology…just not yet within the literature of American psychology. See the following.

McIntosh, R. D., Fowler, E. A., Lyu, T., & Della Sala, S. (2019). Wise up: Clarifying the role of metacognition in the Dunning-Kruger effect. Journal of Experimental Psychology: General, 148(11), 1882–1897.

Gignac, G. E., & Zajenkowski, M. (2020). The Dunning-Kruger effect is (mostly) a statistical artefact: Valid approaches to testing the hypothesis with individual differences data. Intelligence, 80, 101449.

We seem to have reached the limits of direct threading of comments here, so I hope this one shows up in a sensible place.

Gignac, G. E., & Zajenkowski, M. (2020) is one of the two papers I mainly focus on in my post. I’m not going to reiterate my comments on it here.

McIntosh et al. is a more interesting paper, I think, and I did look at it while researching this blog post, but I found the chosen task to be a bit too close to “we’re going to look for our keys under this lamp post, because it’s too dark over there where we lost them”. And this blog post was cluttered enough as it was. But I’ll point out a few things now, admitting that my analysis is a layman’s, as I’m not a psychologist, nor do I have the time to learn the specific statistical approach they are taking:

They, like me, point out that the regression-to-the-mean effect identified by Nuhfer, among others, is easily controlled for, and they mention a number of papers that have done so, including Kruger & Dunning in 2002:

“This artefact is important to recognise, but relatively easy to eliminate. It is driven by unreliability in the measurement of performance, so it can be offset statistically by quantifying and controlling for this unreliability (Ehrlinger et al., 2008; Feld et al., 2017; Krueger & Mueller, 2002; Kruger & Dunning, 2002), or it can be avoided entirely by using separate sub-sets of trials to index performance and to calculate estimation error (Burson et al., 2006; Feld et al., 2017; Klayman, Soll, González-Vallejo, & Barlas, 1999). If either of these steps is taken, the DKE is attenuated, but it is not eliminated, so further factors must also be at work.”
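The second remedy in that quote, using separate subsets of trials, is easy to illustrate. The Python sketch below assumes a world with no real Dunning-Kruger effect: everyone’s self-assessment is unbiased around their true skill, and test performance is measured with noise on two halves of the test. The normal distributions, noise levels and function names are assumptions made for this illustration, not taken from any of the papers.

```python
import random
from statistics import mean


def quartile_mean_errors(group_by, self_assessed, reference):
    """Mean (self-assessed - reference) within each quartile of `group_by`."""
    n = len(group_by)
    order = sorted(range(n), key=lambda i: group_by[i])
    size = n // 4
    return [mean(self_assessed[i] - reference[i] for i in order[k * size:(k + 1) * size])
            for k in range(4)]


def simulate(n=4000, noise=10.0, seed=42):
    rng = random.Random(seed)
    skill = [rng.gauss(50, 10) for _ in range(n)]
    # Noisy performance on two separate subsets of trials
    half_a = [s + rng.gauss(0, noise) for s in skill]
    half_b = [s + rng.gauss(0, noise) for s in skill]
    total = [(a + b) / 2 for a, b in zip(half_a, half_b)]
    # Unbiased self-assessment: no genuine effect is built into this model
    self_assessed = [s + rng.gauss(0, noise) for s in skill]

    # Classic approach: the same scores both define the quartiles and measure the error
    same_scores = quartile_mean_errors(total, self_assessed, total)
    # Split approach: one half defines the quartiles, the other half measures the error
    split_scores = quartile_mean_errors(half_a, self_assessed, half_b)
    return same_scores, split_scores


same_scores, split_scores = simulate()
print("same scores: ", [round(e, 1) for e in same_scores])
print("split halves:", [round(e, 1) for e in split_scores])
```

With the same scores, the bottom quartile appears overconfident and the top quartile underconfident even though nobody in the model is; with split halves, the errors hover near zero. In real data, as the quote says, some effect can remain after this correction, which this toy model cannot show because it has none built in.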

Their study population is not very large. So if you want to criticize that for previous studies, you have to include it here.

Their study task, as I’ve already mentioned, seems to me, as a non-psychologist, to be qualitatively different from some of the main ones where DKE has been shown.

And in the end their conclusion is similar to what I commented about the Gignac paper:

“None of our findings cast doubt on the DKE as an empirical phenomenon. On the contrary, our data extend the classic pattern to novel domains. It is widely true that poor performers overestimate themselves more than high performers, and our data confirm that poor performers may indeed have less metacognitive insight than high performers”

Now since I already spent too much time examining DKE papers for what I first thought would be a simple blog post, and have now spent almost as much time to respond to comments on that post, I’m going to step back and let you have the last word if you wish.

Thank you, Bjørnar, for doing a blog for laypersons on self-assessment and engaging in some serious discussion. Since you’ve kindly granted me the last word, I’d like to use it to explain why the topic you started raises such a meaningful conversation. It goes well with the recent post by Rebecca Watson about whether to consider political opponents as stupid. I want to consider what the behavioral sciences’ consensus endorsing Dunning-Kruger encourages in our judgments of others and close with explaining some damage I believe it can promote in ourselves.

Google “Dunning-Kruger Effect.” My query today returned “About 757,000 results”; the lay public surely knows of Dunning-Kruger. The most frequent uses of the Effect in those Google hits reveal that the public invokes the “Effect” to discredit groups of others as unskilled and unaware. Rebecca Watson does not invoke the Effect for that purpose, so perhaps your post that started this thread helped her avoid doing so.

The credibility conferred by the status of “peer-reviewed publications,” two decades of them asserting that most people hold overly favorable views of their abilities, implies that anyone who deprecates a large group as incompetent and unaware will likely find the pejorative confirmed. That view rewrites human aspiration from its portrayal in “The Little Engine that Could” into a cynical view that becomes “The Little Engine that Shouldn’t Aspire to Much.”

The Effect combines claims that some possess not only expertise but also superior knowledge of themselves. Thus, employing the Effect carries the hubris of judging whole groups of others as inferior. Viewing humanity through that lens offers a peer-reviewed rationalization for authoritarian control by the few “superiors” over the many “inferiors.”

Subscribing to the validity of the Effect can do particular damage to the self. The message “Most people’s views of their competence are unrealistic” also supports the message “I probably shouldn’t trust myself either. My aspirations are probably overly optimistic.” To students striving to obtain knowledge or skills that are not easy to master, such self-doubt is deadly.

Without going off into the weeds on primary literature, “self-efficacy,” the belief in one’s capacity to succeed through effort and teachers’ assistance, is now well established as essential to success in reaching one’s aspirations. John A. Ross at the University of Toronto showed that acquiring self-efficacy requires practice and experience in self-assessment. Self-assessment shouldn’t be seen as divorced from achieving success in learning.

The papers we have discussed in this thread show that most people are NOT “unskilled and unaware” and reveal that replicating the same flawed analyses used in the seminal paper accounts for the apparent “replicability” of the Effect over two decades of subsequent publications. The recent papers are read primarily by scholars, not laypersons. Besides your blog post and Jonathan Jarry’s article, little brings the latest research to the lay public to caution them not to employ the Effect as a weapon and not to abandon belief in themselves or in the validity of their aspirations.

It’s three months since I wrote this post. Perhaps I misremember and Nuhfer et al. do go beyond the graphical representations, so please point me to the parts of Nuhfer’s papers where they explain how the statistical significance tests used in the original and the various improved DK papers are wrong or flawed. Note again that I too agree that many supposed replications of DK use the original, rather weak approach, and that sorting into quartiles is fundamentally problematic. But without DK’s data and the ability to redo the significance tests, producing superficially similar graphs cannot be said to refute the findings of that first paper. And later papers have added layers to the protocol to account for earlier criticism. Any critique that ignores this is, to my mind, woefully incomplete.

I tried some simulations myself, and though the graphs were superficially similar for small samples, very few runs produced graphs that were truly compatible with the Dunning-Kruger hypothesis.

Why are people trying to “attack” the Dunning-Kruger effect in a theoretical manner? Just go out, open your eyes, interact, and you’ll see the effect. As it is, clear as day, unmissable. Not as it is theorized to be.

Now, it takes some time to get enough data to incorporate it into your model, but it’s worth the effort. Note: Don’t generalize your data if you’re not competent in doing so. Just learn to identify the fools and you have the skill set you need to navigate more wisely (i.e. stop associating with fools, and stop being one yourself, in whatever capacity has been granted you thus far; it’s a positive feedback loop once you get going).

I can’t help but get the feeling that this is an apologetic article, granting some leeway to the overconfident idiots out there. The only worthwhile method for anyone who is over- or underconfident is to recalibrate their confidence.

Let’s say one of the hopeless cases wanders in here, to this article. You think it will help cement their overconfidence or help them recalibrate it?

Or is this article just your idea of trying to be a smart-ass?

Are you talking about the Dunning-Kruger effect as described by Dunning and Kruger? You must be, since that is the one being criticized in a theoretical manner. But that term from psychology is not equivalent to “there are lots of overconfident idiots”. It specifically means “people are overconfident because accurately judging their own competence requires the competence they lack”.

Just going out and opening your eyes is a terrible way to do science, by the way. It may be good for determining whether it’s raining, but if you just go out and open your eyes you will see that people using that method fall victim to all the different biases we humans have. That is clear as day and unmissable.

As for what I think would happen if some overconfident hopeless case wandered in here and read this article … Well, first of all, this is not an article written to try to shake people out of mistaken overconfidence. But one possible outcome is that they would post some half-baked criticism of it that showed they had entirely missed every single point made.

What did you think about the Nuhfer paper by the way? Ignore their criticism of D-K and look at their analyses. What do you think about their results?

(Dunno the markup for this site and there seems to be no simple way to find it. I’ll go with using “>” for quoting.)

Just to be clear, I never said “bothering to look is as trivial as mechanically opening your eye lids.” Did you genuinely think so?

Nullcred, the “Effect” that relegates the vast majority of people to having inflated views of their ability is a description of human behavior based on mathematical arguments. This blog uncovered discussions in a peer-reviewed mathematics journal showing that the underlying math is wrong and that the “unskilled and unaware of it” do exist, but are not the majority of people. The people who really are unskilled and unaware of it don’t have to be numerous to cause enough damage to be memorable.

“Just going out and opening your eyes…” cannot change the laws of math. I suggest that you read a book called “The Unnatural Nature of Science,” which explains how science isn’t just “common sense.” Neither is the math underlying the “Effect.”

(I’ll try quoting you in brackets this time, the “>” notation seems funky compared to other sites!)

[The “Effect” that relegates the vast majority of people to having inflated views of their ability is a description of human behavior based on mathematical arguments.]

The Dunning-Kruger effect is specifically about more incompetent people being more prone to overconfidence. Surely you are not questioning the illusory superiority effect (that most people are overconfident in most areas)?

[This blog uncovered discussions in a peer-reviewed mathematics journal showing that the underlying math is wrong and that the “unskilled and unaware of it” do exist, but they are not the majority of people.]

Again, regarding that last statement, are you suggesting that the illusory superiority effect is in doubt? Recall that it is different from the Dunning-Kruger effect.

If you are simply saying that they have managed to make the effect go away (whether with legitimate mathematics or not; I make no claims regarding that bit) from some parts of some of the studies that have been made, then I’d probably agree.

[The people who really are unskilled and unaware of it don’t have to be numerous to cause enough damage to be memorable.]

Indeed, those who shout the loudest tend to stand out, even if they are in a minority.

I’m not convinced that that is the case though. I still wouldn’t be, even if I were to buy the entire Nuhfer paper without any thoughts of my own, which I don’t. I wouldn’t have anywhere near enough data from that paper alone to conclude that “nope, the effect clearly isn’t there at all!”

What they have actually done (the data, and the models) seems to be good work though. Usually, it’s the conclusions / interpretations that are crappy. Just like the original Dunning-Kruger paper, and especially the interpretations and conclusions from the average Jaden who got their hands on it.

[“Just going out and opening your eyes…” cannot change the laws of math. Suggest that you read a book called “The Unnatural Nature of Science,” which explains how science isn’t just “common sense.” Neither is the math underlying the “Effect.”]

Just to be clear, are you saying that bad math is the only thing that supported the effect? That is, if we show that the math is bad (which Nuhfer et al probably have done), the effect is more likely to be non-existent because there is no data supporting it?

Regarding the reading tip, I’ll probably look into it, but I have a question as well: If someone who has studied science for more than 2 decades reads that book, what will they get out of it?

“People tend to hold overly favorable views of their abilities in many social and intellectual domains” (Kruger & Dunning, 1999)

“People are typically overly optimistic when evaluating the quality of their performance on social and intellectual tasks. In particular, poor performers grossly overestimate their performances because their incompetence deprives them of the skills needed to recognize their deficits” (Ehrlinger, Johnson, Banner, Dunning, & Kruger, 2008).

Both quotes, taken nearly a decade apart, show that the claim seems from the start to have been intended to apply to the majority of people, not to a minority. The papers in Numeracy explain why this is not true, and show that the label of “unskilled and unaware of it” applies only to a small minority of people. What the papers do show is how dangerous it is to employ the Dunning-Kruger Effect as a pejorative to deprecate any large group of people. Doing so isn’t science and lacks the support of evidence in science and quantitative reasoning. Rather, the mathematics shows that such careless stereotyping is likely to be dead wrong.

The argument in the original paper was based on a graphic unique to that paper that displays random noise as mathematical artifacts that were easily described as human behavior. Unfortunately, that graphic is still in use: the most recent example I am aware of (there may be later ones) is from 2020 (Figure 6 in https://journals.ametsoc.org/view/journals/bams/101/7/bamsD190081.xml).
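A toy version of the point about random noise, as a hedged Python sketch: both the actual and the self-assessed percentiles below are drawn independently at random (an assumption chosen to represent pure noise, not anyone’s real data), and the hypothetical helper `random_noise_quartile_plot_data` tabulates them the way the quartile figure does. Because the self-assessments carry no information, each quartile’s mean self-assessment sits near 50, so the bottom quartile appears to overestimate itself and the top quartile appears to underestimate itself by roughly the same amount. This reproduces only the shape of the figure; by itself it says nothing about the significance tests in the original paper.

```python
import random
import statistics


def random_noise_quartile_plot_data(n_people=4000, seed=7):
    """Hypothetical data in which self-assessment carries no information at all.

    Both the actual percentile and the self-assessed percentile are drawn
    independently and uniformly from 0-100 (an assumption for illustration).
    Returns (quartile, mean actual percentile, mean self-assessed percentile).
    """
    rng = random.Random(seed)
    people = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(n_people)]
    # Sort by actual percentile and cut into four equal groups,
    # as in the classic quartile-style figure.
    people.sort(key=lambda p: p[0])
    size = n_people // 4
    rows = []
    for q in range(4):
        group = people[q * size:(q + 1) * size]
        actual = statistics.mean(p[0] for p in group)
        perceived = statistics.mean(p[1] for p in group)
        rows.append((q + 1, round(actual, 1), round(perceived, 1)))
    return rows


for quartile, actual, perceived in random_noise_quartile_plot_data():
    print(f"Q{quartile}: actual {actual:5.1f}  self-assessed {perceived:5.1f}")
```

Plotting the two columns against quartile gives a rising “actual” line and a flat “self-assessed” line that crosses it, even though no simulated person has any self-knowledge at all.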

A person who understands science is likely to get quite a bit out of The Unnatural Nature of Science. It received great reviews, was written by an author of cell biology texts, and is one of the few books that draws a clear distinction between science and technology.

At the risk of being obvious, I do not believe I have the appropriate knowledge to comment beyond simply offering Professor Dunning’s personal view, in this chapter:

https://www.sciencedirect.com/science/article/abs/pii/B9780123855220000056

I wonder if many people are Dunning-Kruger about the DKE.

The objections to the claim that most people are overly optimistic about their self-assessed competence are not psychological; they are mathematical. If the validity of the D-K Effect is to be retained, the mathematical arguments used to establish the Effect should be revisited. The reasons for the objections are best understood from papers in a peer-reviewed mathematics journal. Here is a compilation of the resources needed to understand the objections.

https://journals.asm.org/doi/10.1128/jmbe.v16i2.986

https://digitalcommons.usf.edu/numeracy/vol12/iss2/art2/

https://digitalcommons.usf.edu/numeracy/vol10/iss1/art4/

https://digitalcommons.usf.edu/numeracy/vol9/iss1/art4/

https://books.aosis.co.za/index.php/ob/catalog/book/279 Chapter 6

https://www.improvewithmetacognition.com/metacognitive-self-assessment/

https://www.improvewithmetacognition.com/paired-self-assessment-competence/

https://www.researchgate.net/publication/353908528_Self-reflexive_cognitive_bias plus comment

Latest reference, from the British Journal of Psychology:

Kramer, R. S. S., Gous, G., Mireku, M. O., & Ward, R. (2022). Metacognition during unfamiliar face matching. British Journal of Psychology, 00, 1–22. https://doi.org/10.1111/bjop.12553

and still more…

Gignac, Gilles E. (2022). The association between objective and subjective financial literacy: Failure to observe the Dunning-Kruger effect. Personality and Individual Differences 184: 111224. https://doi.org/10.1016/j.paid.2021.111224

Magnus, Jan R. and Peresetsky, A. (October 04, 2021). A statistical explanation of the Dunning-Kruger effect. Tinbergen Institute Discussion Paper 2021-092/III, http://dx.doi.org/10.2139/ssrn.3951845

The European psychology profession is no longer afraid to question the numeracy underlying “the Dunning-Kruger effect.” The American school of psychology where the D-K Effect originated and was popularized is looking increasingly like the American school of geology that tried to suppress the widespread acceptance of plate tectonics in the 1960s.

…and yet another…

Hofer, G., Mraulak, V., Grinschgl, S., & Neubauer, A.C. (2022). Less-Intelligent and Unaware? Accuracy and Dunning–Kruger Effects for Self-Estimates of Different Aspects of Intelligence. Journal of Intelligence, 10(1). https://doi.org/10.3390/jintelligence10010010